High-speed, Aggressive 6DoF Trajectories for State Estimation and Drone Racing

News

| Date | News |
| --- | --- |
| May 2nd 2024 | We have a new competition! The competition is hosted at the RSS 24 workshop on Safety Autonomy. |
| February 9th 2024 | We have a new leaderboard! We extended the test dataset to include the sequences in the SplitS track. |
| December 1st 2023 | Added new sequences in a SplitS track with peak speeds up to 100 km/h. Check our Nature paper for details. |
| February 2nd 2022 | New ground truth is available. The leaderboard has been updated. |
| October 26th 2020 | The results from our IROS 2020 competition are public. |

About

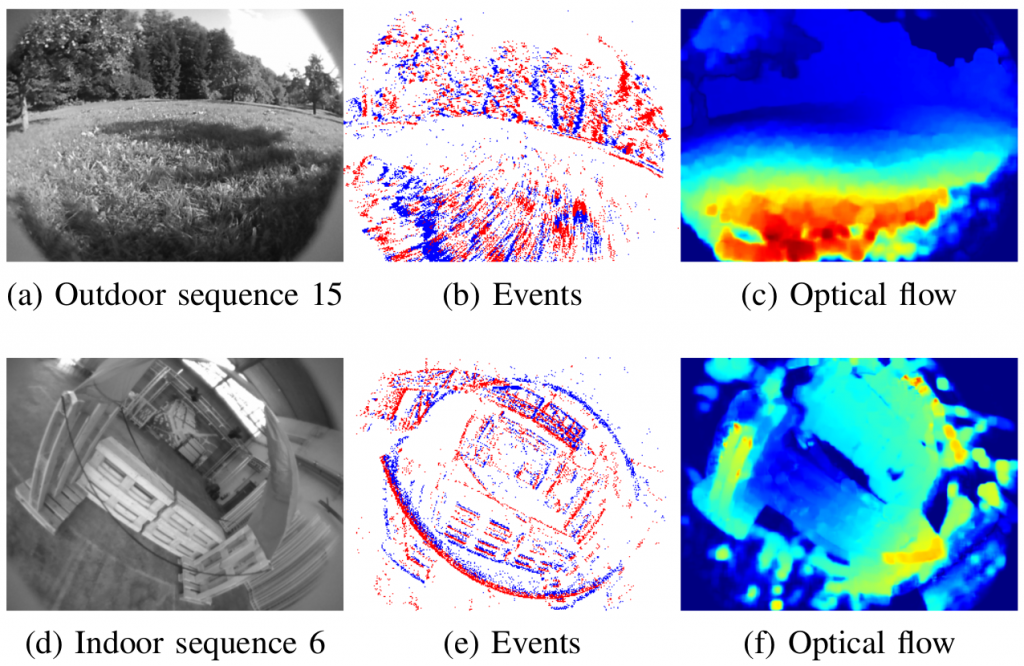

We introduce the UZH-FPV Drone Racing dataset, the most aggressive visual-inertial odometry dataset to date. Large accelerations, rotations, and apparent motion in vision sensors make aggressive trajectories difficult for state estimation. Many compelling applications, such as autonomous drone racing, require high-speed state estimation, yet existing datasets do not address this. The sequences were recorded with a first-person-view (FPV) drone racing quadrotor fitted with sensors and flown aggressively by an expert pilot. The trajectories include fast laps around a racetrack with drone racing gates, as well as free-form trajectories around obstacles, both indoors and outdoors. We provide camera images and IMU data from a Qualcomm Snapdragon Flight board, ground truth from a Leica Nova MS60 laser tracker, event data from an mDAVIS 346 event camera, and high-resolution RGB images from the pilot’s FPV camera. Our goal with this dataset is to help advance the state of the art in high-speed state estimation.

Publication

When using this work in an academic context, please cite the following publications:

Publication accompanying the Dataset

Are We Ready for Autonomous Drone Racing? The UZH-FPV Drone Racing Dataset

IEEE International Conference on Robotics and Automation (ICRA), 2019.

@InProceedings{Delmerico19icra,
  author = {Jeffrey Delmerico and Titus Cieslewski and Henri Rebecq and Matthias Faessler and Davide Scaramuzza},
  title = {Are We Ready for Autonomous Drone Racing? The {UZH-FPV} Drone Racing Dataset},
  booktitle = {{IEEE} Int. Conf. Robot. Autom. ({ICRA})},
  year = 2019
}

Publication containing the approach used to generate the ground truth

Continuous-Time vs. Discrete-Time Vision-based SLAM: A Comparative Study

Robotics and Automation Letters (RA-L), 2022.

@article{cioffi2022continuous,
  title={Continuous-Time vs. Discrete-Time Vision-based SLAM: A Comparative Study},
  author={Cioffi, Giovanni and Cieslewski, Titus and Scaramuzza, Davide},
  journal={{IEEE} Robotics and Automation Letters ({RA-L})},
  year={2022},
  publisher={IEEE}
}

Publication containing the approach used to record the sequence in the SplitS track

Champion-level Drone Racing using Deep Reinforcement Learning

Nature, 2023.

@article{kaufmann2023champion,
  title={Champion-level drone racing using deep reinforcement learning},
  author={Kaufmann, Elia and Bauersfeld, Leonard and Loquercio, Antonio and M{\"u}ller, Matthias and Koltun, Vladlen and Scaramuzza, Davide},
  journal={Nature},
  volume={620},
  number={7976},
  pages={982--987},
  year={2023},
  publisher={Nature Publishing Group UK London}
}

License

This dataset is released under the Creative Commons license CC BY-NC-SA 3.0, which permits free non-commercial use (including research).

Acknowledgments

This work was supported by the National Centre of Competence in Research (NCCR) Robotics through the Swiss National Science Foundation, the SNSF-ERC Starting Grant, and the DARPA FLA Program.

This work would not have been possible without the assistance of Stefan Gächter, Zoltan Török, and Thomas Mörwald of Leica Geosystems and their support in gathering our data. Additional thanks go to Innovation Park Zürich and the Fässler family for providing experimental space, to iniVation AG and Prof. Tobi Delbruck for their support and guidance with the mDAVIS sensors, and to Hanspeter Kunz from the Department of Informatics at the University of Zurich for his support with setting up this website.